The Pitch: Presenting Voice Kanban

September 23-27, 2025: the week to present Voice Kanban, with my professor and stakeholders I didn't know watching. Dana, Chris, Ted, Oliver: four personas, six task types, three intelligence layers. Everything on the line.

The Setup

One slide deck, four personas, and a vision: "Voice-first task orchestration that turns specialists into T-shaped team members by detecting bottlenecks and suggesting cross-training opportunities."

My professor's guidance echoed in my head: "Hide complexity inside simplicity. Show me the outcome, not the architecture."

The Problem Statement

I opened with a scenario: It's 2 AM. Production crashed. Dana found the error in logs but can't fix it. Chris could fix it but doesn't have deployment access. Oliver has access but doesn't understand the codebase.

Three capable people, all blocked by role boundaries. Sequential handoff waste. Toyota solved this in 1956. We're still doing it wrong in 2025.

The Solution: Three Intelligence Layers

The core innovation is not the voice interface itself but what it enables:

- Domain-aware routing: Understanding that "authentication bug" needs someone with both debugging skills and security knowledge

- Flow-conscious queueing: WIP limits that actually work, capped at 3 tasks per person and 8 across the team. When a queue backs up, the system flags it as a bottleneck

- Context linkage with cross-training: When testing queues overflow while coders sit idle, suggest adjacent testing tasks to the coders. Turn bottlenecks into learning opportunities
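To make the three layers concrete, here is a minimal in-memory sketch of how they could interact. Only the WIP limits (3 per person, 8 per team) come from the pitch. The `Task` and `Person` classes, the skill tags, the `backlog_threshold` that defines a "backed-up" queue, and the `ADJACENT_ROLE` map are hypothetical illustrations, not the project's actual design.

```python
from __future__ import annotations

from dataclasses import dataclass, field

PERSON_WIP_LIMIT = 3  # from the pitch: at most 3 tasks per person
TEAM_WIP_LIMIT = 8    # from the pitch: at most 8 tasks across the team

# Hypothetical adjacency map: for a backed-up task type, which role is
# close enough to help out with it (and learn from it).
ADJACENT_ROLE = {"testing": "coding", "coding": "testing", "design": "coding", "ops": "coding"}


@dataclass
class Task:
    title: str
    task_type: str             # e.g. "coding", "testing" (illustrative labels)
    required_skills: set[str]


@dataclass
class Person:
    name: str
    role: str                  # home task type, e.g. "coding" for Chris
    skills: set[str]
    active: list[str] = field(default_factory=list)  # titles of in-progress tasks

    def has_capacity(self) -> bool:
        return len(self.active) < PERSON_WIP_LIMIT


def team_wip(team: list[Person]) -> int:
    """Total number of in-progress tasks across the team."""
    return sum(len(p.active) for p in team)


def route(task: Task, team: list[Person]) -> Person | None:
    """Domain-aware routing: assign the task to the least-loaded person who
    holds every required skill, without breaking either WIP limit.
    Returns None when the task has to wait in the queue."""
    if team_wip(team) >= TEAM_WIP_LIMIT:
        return None
    candidates = [p for p in team if task.required_skills <= p.skills and p.has_capacity()]
    if not candidates:
        return None
    chosen = min(candidates, key=lambda p: len(p.active))
    chosen.active.append(task.title)
    return chosen


def bottlenecks(queues: dict[str, list[Task]], backlog_threshold: int = 3) -> list[str]:
    """Flow-conscious queueing: flag task types whose waiting queue has
    reached the (hypothetical) backlog threshold."""
    return [t for t, waiting in queues.items() if waiting and len(waiting) >= backlog_threshold]


def cross_training_suggestions(
    queues: dict[str, list[Task]], team: list[Person], backlog_threshold: int = 3
) -> list[tuple[str, str]]:
    """Context linkage: for each backed-up task type, suggest its oldest
    waiting task to people in the adjacent role who still have capacity."""
    if team_wip(team) >= TEAM_WIP_LIMIT:
        return []  # nobody can pick up new work without breaking the team limit
    suggestions = []
    for task_type in bottlenecks(queues, backlog_threshold):
        helper_role = ADJACENT_ROLE.get(task_type)
        for p in team:
            if p.role == helper_role and p.has_capacity():
                suggestions.append((p.name, queues[task_type][0].title))
    return suggestions
```

With Chris tagged `{"debugging", "security"}`, an "authentication bug" requiring both tags routes to him, and a backed-up testing queue produces a suggestion for Chris only while he is under his personal limit. Picking the least-loaded qualified person is one simple tie-breaker; a real router might weigh learning goals as well.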

The Personas

I introduced each team member as a real person with real constraints:

- Dana (Designer): Expert in Figma, learning CSS. Wants to understand how designs get implemented

- Chris (Coder): Backend wizard, weak on testing. Doesn't understand why design details matter

- Ted (Tester): QA specialist, curious about automation. Wants to understand the code he's testing

- Oliver (Ops): DevOps guru, doesn't talk to users. Fixes infrastructure but doesn't understand business requirements

Four silos. Six task types to bridge them.

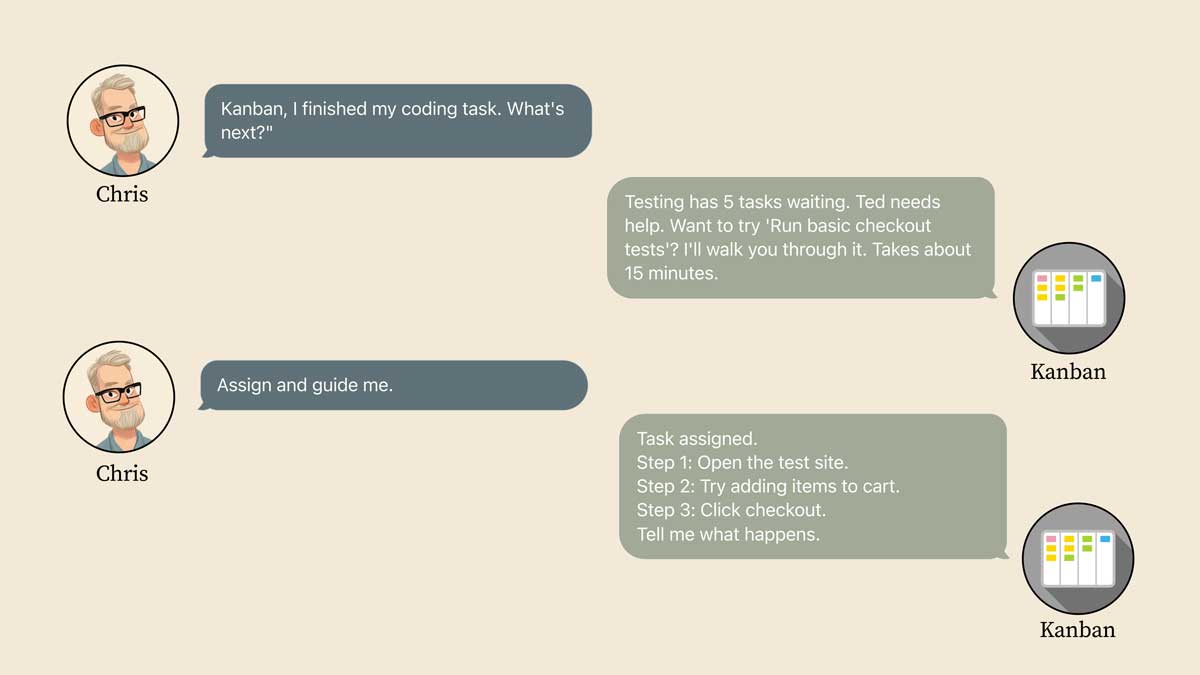

The Voice Interaction Vision

I walked through the intended workflow:

"Show me current bottlenecks" → System identifies queue backups and suggests actions

"What can Dana learn while waiting?" → System suggests adjacent tasks based on her design work and current capacity

The goal: voice commands that make task management so frictionless that cross-training becomes natural.
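Once speech has been transcribed, each utterance still has to be mapped to an action. A minimal sketch for the two commands above, assuming simple regular-expression intents; the intent names and patterns are hypothetical. In the pitched stack this interpretation would more likely sit with the Claude API layer, and hand-written patterns are used here only to keep the sketch self-contained.

```python
from __future__ import annotations

import re

# Hypothetical intent patterns for the two example commands.
INTENTS = [
    ("show_bottlenecks", re.compile(r"\bshow (me )?(the )?(current )?bottlenecks?\b", re.IGNORECASE)),
    ("suggest_learning", re.compile(r"\bwhat can (?P<person>\w+) learn\b", re.IGNORECASE)),
]


def parse_command(transcript: str) -> tuple[str, dict[str, str]] | None:
    """Return (intent name, extracted slots) for the first matching pattern,
    or None when nothing matches."""
    for name, pattern in INTENTS:
        match = pattern.search(transcript)
        if match:
            # Keep only named groups that actually captured something.
            slots = {key: value for key, value in match.groupdict().items() if value}
            return name, slots
    return None
```

For example, `parse_command("What can Dana learn while waiting?")` yields the `suggest_learning` intent with `person` set to `"Dana"`. An unmatched transcript returns `None`, which is the natural place for a fallback such as asking the user to rephrase.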

The Technical Stack

I kept the architecture clear:

- Voice Layer: OpenAI Whisper Large V3

- Intelligence Layer: Claude API for routing logic

- Integration Layer: MCP servers to GitHub/Trello/Jira

- Orchestration: HP AI Studio as the central hub

The innovation isn't in any single component. It's in how they combine to help teams break down silos.
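How the layers combine can be sketched as a chain of swappable components. A minimal sketch, assuming each layer is reduced to a single method; the `Transcriber`, `Interpreter`, and `TaskBoard` protocols and the stub classes are hypothetical stand-ins. They deliberately do not call the Whisper, Claude, or MCP APIs.

```python
from __future__ import annotations

from typing import Protocol


class Transcriber(Protocol):   # voice layer (Whisper in the pitch)
    def transcribe(self, audio: bytes) -> str: ...


class Interpreter(Protocol):   # intelligence layer (Claude in the pitch)
    def interpret(self, text: str) -> dict: ...


class TaskBoard(Protocol):     # integration layer (MCP servers in the pitch)
    def apply(self, action: dict) -> str: ...


def handle_voice_command(audio: bytes, stt: Transcriber, brain: Interpreter, board: TaskBoard) -> str:
    """Orchestration: audio -> text -> structured action -> board update."""
    text = stt.transcribe(audio)
    action = brain.interpret(text)
    return board.apply(action)


# Stub implementations so the chain runs end to end without external services.
class FakeTranscriber:
    def transcribe(self, audio: bytes) -> str:
        return audio.decode("utf-8")  # pretend the "audio" is already text


class KeywordInterpreter:
    def interpret(self, text: str) -> dict:
        if "bottleneck" in text.lower():
            return {"action": "report_bottlenecks"}
        return {"action": "unknown", "text": text}


class InMemoryBoard:
    def __init__(self) -> None:
        self.log: list[dict] = []  # every action the board has received

    def apply(self, action: dict) -> str:
        self.log.append(action)
        return f"handled {action['action']}"
```

Because each layer sits behind a narrow interface, any one of them can be replaced by a stub or an alternative implementation without touching the rest of the chain.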

The 20-Week Roadmap

I presented the phase-based development plan:

- Weeks 1-3: Research, pitch, ethics framework

- Weeks 4-8: Local setup, voice processing, basic routing

- Weeks 9-14: MCP integration, assignment algorithms, flow strategies

- Weeks 15-18: Cross-training suggestions, bottleneck detection, refinement

- Weeks 19-20: Final testing, documentation, presentation

The Feedback

My professor listened carefully. The stakeholders watched. When I finished, the response was direct.

"You need to think through your development plan more."

Not a rejection. A challenge. The vision was sound, but the execution path needed more detail. How would I handle integration failures? What were the fallback options? Where were the buffer zones?

The Reality Check

Walking out, I understood: having a compelling vision isn't enough. I needed a realistic roadmap that accounted for the unknowns.

MCP integration could fail. Voice processing could be harder than expected. APIs might not work as documented. The professor was right: I needed contingency plans.

But I had approval to move forward. First step: the ethics framework. Before writing any code, I needed to think through the implications of building a system that makes decisions about people's work.

The pitch was done. Now came the hard part: actually building it.