Midterm Ethics Review: The Framework

Early October: Before writing a single line of code, we had to answer the hard questions. Data privacy, bias mitigation, worker autonomy. Technical decisions are ethical decisions.

Why Ethics First?

Professor Bartlett made this clear from day one: you don't bolt ethics onto a project after it's built. You design with ethics from the foundation.

Voice Kanban collects voice data, routes tasks based on AI decisions, and potentially tracks productivity patterns. Every one of those capabilities has ethical implications. So before HP AI Studio. Before Whisper integration. Before a single Git commit.

We needed a framework.

The Four Pillars

The midterm ethics review was structured around four critical areas:

1. Data & Privacy Ethics

The Question: What data does Voice Kanban collect, and how does it impact user privacy?

The Reality: Every voice command creates a traceable digital footprint. Audio travels from device → speech API (Google/AWS) → NLU service → GitHub API → analytics platforms. Each node generates logs with timestamps, IP addresses, user identifiers, and query content.

The Risk: Once audio reaches Google's Speech API, it falls under Google's privacy policy, and true deletion becomes technically unverifiable. Voice recordings can also inadvertently capture sensitive disclosures that may then become permanently searchable.

Decision: Implement granular consent mechanisms and local processing options wherever possible.
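A minimal sketch of how the local-first decision could be enforced in code. It assumes a hypothetical `ProcessingConsent` record and illustrative provider labels such as `"google-speech"` and `"local-whisper"`, which are placeholder strings rather than real API identifiers. Audio goes to a cloud provider only when the user has opted out of local processing and approved that specific provider. In every other case it stays on the device:

```python
from dataclasses import dataclass, field

# Hypothetical label for on-device transcription with a locally run Whisper model.
LOCAL_BACKEND = "local-whisper"


@dataclass
class ProcessingConsent:
    """Illustrative per-user record of where audio may be processed."""

    # Cloud providers the user approved one by one, e.g. {"google-speech"}.
    approved_cloud_providers: set[str] = field(default_factory=set)
    # Local processing is the default; users must actively opt out of it.
    prefer_local: bool = True


def choose_transcription_backend(consent: ProcessingConsent, cloud_provider: str) -> str:
    """Return the backend allowed to receive this user's audio.

    Audio leaves the device only if the user has opted out of local processing
    AND approved this specific provider; otherwise it stays local.
    """
    if not consent.prefer_local and cloud_provider in consent.approved_cloud_providers:
        return cloud_provider
    return LOCAL_BACKEND


print(choose_transcription_backend(ProcessingConsent(), "google-speech"))
# local-whisper
print(choose_transcription_backend(ProcessingConsent({"google-speech"}, prefer_local=False), "google-speech"))
# google-speech
```

Making local processing the default means a user who never touches the settings never sends audio to a third party.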

2. Bias & Fairness

The Question: How might Voice Kanban's task routing algorithms perpetuate or amplify existing biases?

The Reality: AI doesn't create bias from nothing—it learns from historical patterns. If past data shows women assigned more documentation tasks and men more coding tasks, the algorithm will reinforce these patterns unless explicitly prevented.

The Risk: Voice recognition systems have historically performed worse for non-native speakers, people with accents, and women. Task assignment based on "past performance" could trap junior developers in low-skill work.

Decision: Regular bias audits, skill equity tracking, and manual override capabilities for all AI suggestions.
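One possible shape for a skill equity audit, offered as a sketch. It compares each group's share of every task category with the team-wide share and flags gaps above a threshold. The `(group, category)` input format, the grouping by experience level, and the 0.15 threshold are all assumptions for illustration:

```python
from collections import Counter, defaultdict


def category_shares(assignments):
    """Return each task category's share of the given (group, category) pairs."""
    counts = Counter(category for _, category in assignments)
    total = sum(counts.values())
    return {category: n / total for category, n in counts.items()}


def audit_skill_equity(assignments, threshold=0.15):
    """Flag (group, category, group_share, team_share) tuples where a group's share
    of a category differs from the team-wide share by more than `threshold`."""
    team = category_shares(assignments)
    by_group = defaultdict(list)
    for group, category in assignments:
        by_group[group].append((group, category))

    findings = []
    for group in sorted(by_group):
        shares = category_shares(by_group[group])
        for category in sorted(team):
            share = shares.get(category, 0.0)  # category never assigned to this group
            if abs(share - team[category]) > threshold:
                findings.append((group, category, round(share, 2), round(team[category], 2)))
    return findings


# Hypothetical quarter: juniors mostly get docs, seniors mostly get features.
history = (
    [("junior", "docs")] * 6 + [("junior", "feature")] * 2
    + [("senior", "docs")] * 2 + [("senior", "feature")] * 6
)
for finding in audit_skill_equity(history):
    print(finding)
```

Grouping by experience level targets the junior-developer trap described above. A flagged row is meant to prompt human review, not to trigger an automatic correction.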

3. Transparency & Explainability

The Question: When the system suggests a task, can users understand why?

The Reality: "Black box" AI decisions erode trust. If Ted gets assigned a debugging task and doesn't know why, he can't evaluate whether it's appropriate for his skill level or learning goals.

The Solution: Every task suggestion includes visible reasoning: "Suggested because: (1) You have experience with authentication systems, (2) Testing queue is backed up, (3) Task complexity matches your skill level."

Decision: No hidden AI decisions. All routing logic must be explainable in plain language.
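A sketch of weighted scoring that generates its own plain-language explanation. The factor names, weights, reason templates, and the 0.5 cutoff for a factor being "strong enough to mention" are hypothetical. The point is that the same numbers that rank a task also produce the reasons the worker sees:

```python
from dataclasses import dataclass

# Hypothetical factor weights; real values would be set and audited by the team.
WEIGHTS = {"experience": 0.5, "queue_pressure": 0.3, "complexity_fit": 0.2}

# Plain-language template for each factor, shown to the worker as written.
REASONS = {
    "experience": "You have experience with {area}",
    "queue_pressure": "{queue} queue is backed up",
    "complexity_fit": "Task complexity matches your skill level",
}


@dataclass
class Explanation:
    score: float
    reasons: list[str]


def explain_suggestion(factors: dict[str, float], context: dict[str, str],
                       min_factor: float = 0.5) -> Explanation:
    """Score a task as a weighted sum of factors in [0, 1], then list every factor
    at or above `min_factor` as a numbered reason, largest contribution first."""
    contributions = {name: WEIGHTS[name] * value for name, value in factors.items()}
    score = sum(contributions.values())
    ranked = sorted(contributions, key=contributions.get, reverse=True)
    strong = [name for name in ranked if factors[name] >= min_factor]
    reasons = [f"({i}) {REASONS[name].format(**context)}" for i, name in enumerate(strong, start=1)]
    return Explanation(round(score, 2), reasons)


ctx = {"area": "authentication systems", "queue": "Testing"}
result = explain_suggestion({"experience": 0.9, "queue_pressure": 0.8, "complexity_fit": 0.7}, ctx)
print("Suggested because: " + ", ".join(result.reasons))
# Suggested because: (1) You have experience with authentication systems, (2) Testing queue is backed up, (3) Task complexity matches your skill level
```

Because the reasons come straight from the scoring inputs, the explanation cannot drift away from what the router actually did.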

4. Autonomy & Human Agency

The Question: Does Voice Kanban empower workers or enable surveillance?

The Reality: The workplace power dynamic makes consent inherently coercive. Employees may feel pressured to adopt voice tools, knowing that refusing could signal resistance to new technology. Managers could gain access to detailed records of which tickets employees query, when they work, and what they prioritize.

The Design Principle: AI should suggest, never command. Workers must retain final authority over task selection, work pace, and cross-training decisions.

Decision: All AI suggestions are optional. No productivity metrics derived from voice command frequency. No manager dashboards showing individual worker patterns.
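"Suggest, never command" can be enforced structurally rather than by policy alone. In this sketch, built around a hypothetical `Suggestion` class and a dictionary standing in for the board, a suggestion changes nothing until the suggested worker accepts it. Nobody else can accept on that worker's behalf:

```python
from dataclasses import dataclass
from enum import Enum


class Status(Enum):
    PENDING = "pending"
    ACCEPTED = "accepted"
    DECLINED = "declined"


@dataclass
class Suggestion:
    """An AI routing suggestion; it has no effect until the worker responds."""

    task_id: str
    worker: str
    status: Status = Status.PENDING

    def respond(self, responder: str, accept: bool) -> None:
        # Only the worker the task was suggested to may decide on it.
        if responder != self.worker:
            raise PermissionError("only the suggested worker can accept or decline")
        if self.status is not Status.PENDING:
            raise ValueError("suggestion already answered")
        self.status = Status.ACCEPTED if accept else Status.DECLINED


def assign_if_accepted(suggestion: Suggestion, board: dict[str, str]) -> bool:
    """Write the assignment to the board only after explicit acceptance.
    Pending or declined suggestions leave the board unchanged."""
    if suggestion.status is Status.ACCEPTED:
        board[suggestion.task_id] = suggestion.worker
        return True
    return False


board: dict[str, str] = {}
declined = Suggestion("TASK-1", "ted")
declined.respond("ted", accept=False)
print(assign_if_accepted(declined, board), board)  # False {}
```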

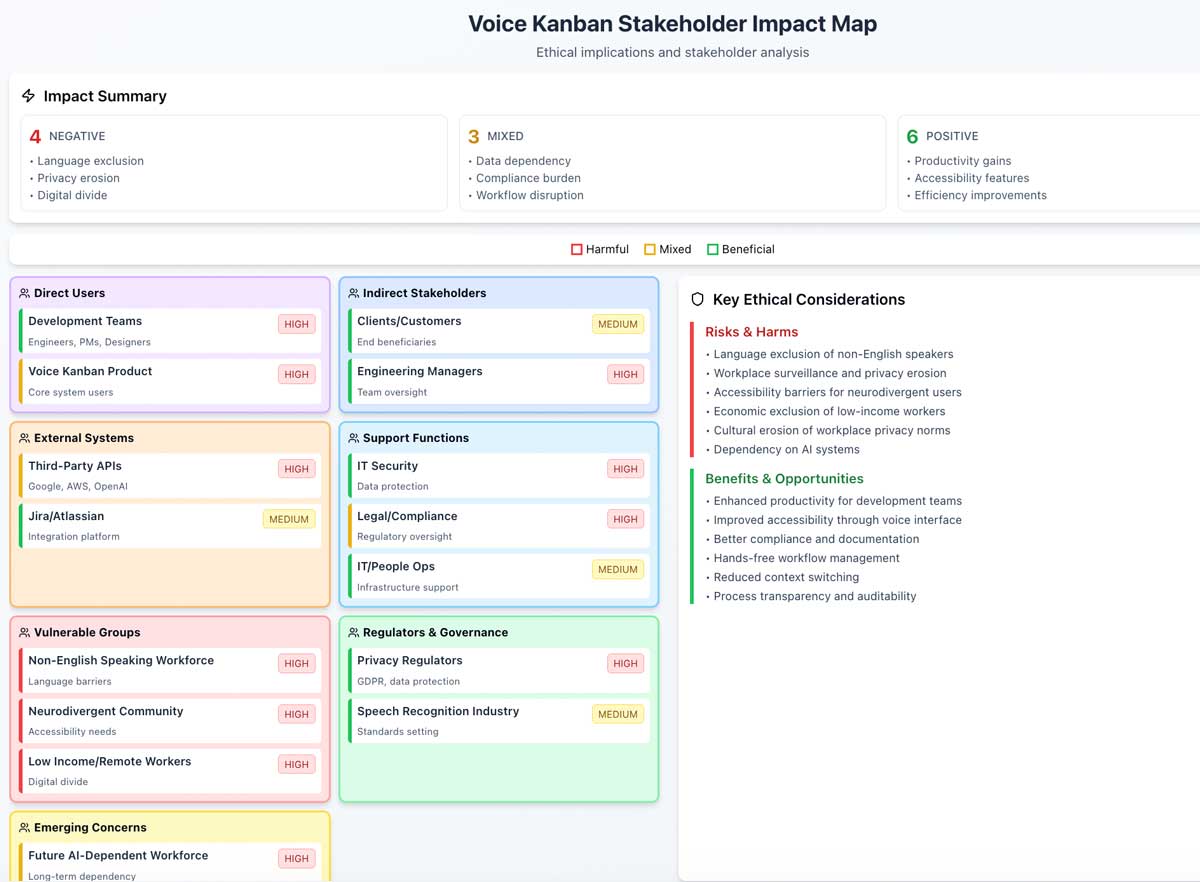

The Stakeholder Impact Map

The visual at the top of this post maps every stakeholder group affected by Voice Kanban, color-coded by impact severity:

- Red (High Negative): Low-income remote workers who lack quality microphones, non-native speakers facing voice recognition bias, future AI-dependent workforce losing manual skills

- Yellow (Medium): Workplace privacy concerns, third-party data handlers, engineering managers balancing oversight with trust

- Green (High Positive): Development teams breaking down silos, engineering managers getting real-time bottleneck visibility, remote-first companies enabling async voice workflows

The center circle represents direct users: developers, designers, testers, product managers, UX designers. The middle ring shows indirect stakeholders: HR, operations, engineering managers. The outer ring captures broader societal impacts.

Consent Mechanisms

Generic "I agree" checkboxes aren't enough. Informed consent requires:

- Voice Recording Consent: Explicit acknowledgment that speech becomes audio files, with real-time recording indicators (visual red dot, audio tone) and clear start/stop boundaries

- Third-Party Processing Consent: A specific disclosure that names each provider individually (e.g., "Google Cloud Speech API will transcribe your audio") along with its data retention policy

- Action Scope Consent: Separate permissions for read vs write operations (viewing tasks vs creating them), project-level access controls, and team-wide vs personal data access

- Revocability: One-click consent withdrawal, data deletion within 30 days, export of all personal data before deletion
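The four consent requirements map fairly directly onto a per-user record. This is a minimal sketch with hypothetical field and method names. Persistence, an audit trail, and the data export step that should run before deletion are left out:

```python
from dataclasses import dataclass, field
from datetime import date, timedelta
from typing import Optional

# Matches the "data deletion within 30 days" commitment.
DELETION_WINDOW = timedelta(days=30)


@dataclass
class ConsentRecord:
    user: str
    # Voice recording consent: without it, no voice command can run at all.
    voice_recording: bool = False
    # Third-party processing: each provider is consented to individually.
    providers: set[str] = field(default_factory=set)
    # Action scope per project: "read" and/or "write".
    project_scopes: dict[str, set[str]] = field(default_factory=dict)
    revoked_on: Optional[date] = None

    def allows_voice_action(self, project: str, action: str) -> bool:
        """Check whether a voice command may perform `action` on `project`."""
        if self.revoked_on is not None or not self.voice_recording:
            return False
        return action in self.project_scopes.get(project, set())

    def revoke(self, today: date) -> date:
        """One-click withdrawal: clear every grant and return the deletion deadline."""
        self.voice_recording = False
        self.providers.clear()
        self.project_scopes.clear()
        self.revoked_on = today
        return today + DELETION_WINDOW


record = ConsentRecord("ted", voice_recording=True, providers={"google-speech"},
                       project_scopes={"web-app": {"read"}})
print(record.allows_voice_action("web-app", "read"))   # True
print(record.allows_voice_action("web-app", "write"))  # False
print(record.revoke(date(2024, 10, 1)))                # 2024-10-31
```

Keeping read and write as separate entries per project means granting "view my tasks" never silently grants "create tasks".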

The Amazon Alexa Parallel

Amazon's Alexa faced multiple privacy controversies: human contractors listening to voice recordings, unclear data retention policies, law enforcement requests for audio files as evidence.

Microsoft's Workplace Analytics, which tracks employee communication patterns, raised concerns about surveillance capitalism in professional settings.

Voice Kanban operates in the same landscape. The difference? We're designing with these cautionary tales in mind rather than discovering them post-launch.

Technical Decisions Are Ethical Decisions

Here's what this framework means in practice:

- Choosing OpenAI Whisper over cloud APIs makes local processing possible, reducing third-party data exposure

- Designing weighted scoring algorithms with visible factors means users can evaluate fairness

- Building optional voice commands instead of voice-only interfaces preserves accessibility for people who cannot or prefer not to speak

- Implementing WIP limits prevents AI-driven overwork even if the system could technically assign more tasks

- Refusing to track command frequency as a productivity metric protects worker autonomy
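The WIP-limit constraint can work as a hard filter applied before any routing suggestion is made. This sketch assumes a hypothetical limit of three in-progress tasks per person and a board represented as `(task_id, assignee)` pairs. It looks only at board state and never counts voice commands:

```python
from collections import Counter

# Hypothetical per-person limit; each team would choose its own.
WIP_LIMIT = 3


def can_suggest(worker: str, in_progress: list[tuple[str, str]], limit: int = WIP_LIMIT) -> bool:
    """Return True only if the worker holds fewer than `limit` in-progress tasks.

    `in_progress` holds (task_id, assignee) pairs from the In Progress column.
    """
    load = Counter(assignee for _, assignee in in_progress)
    return load[worker] < limit  # Counter returns 0 for workers with no tasks


def eligible_workers(team: list[str], in_progress: list[tuple[str, str]],
                     limit: int = WIP_LIMIT) -> list[str]:
    """Workers the router may suggest a new task to; anyone at the limit is skipped."""
    return [worker for worker in team if can_suggest(worker, in_progress, limit)]


board = [("T1", "ted"), ("T2", "ted"), ("T3", "ted"), ("T4", "ana")]
print(eligible_workers(["ted", "ana", "sam"], board))  # ['ana', 'sam']
```

Filtering before scoring means an overloaded worker never sees the suggestion at all, however well they would otherwise match the task.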

What I Learned

Ethics isn't a checkbox. It's not a final section of documentation. It's a lens through which you evaluate every design decision.

Should this feature collect data? Should this algorithm make automatic decisions? Should this dashboard show manager-level analytics?

The answer isn't always "no." But the answer requires systematic consideration of impact, consent, bias, and power dynamics.

Before writing code, I had to write this framework. Before building the system, I had to map its stakeholders. Before enabling voice commands, I had to design consent mechanisms.

This wasn't bureaucracy. This was the foundation that makes Voice Kanban worth building.

The Commitments

The midterm ethics review concluded with specific commitments:

- No productivity surveillance—voice command frequency never becomes a performance metric

- Skill equity auditing—quarterly reviews to ensure task routing doesn't perpetuate bias

- Local processing options—workers can choose Whisper local over cloud APIs

- Explainable AI—every suggestion includes visible reasoning

- Worker consent—granular permissions, revocable at any time

- Anti-scope-creep protection—no feature additions that violate these principles

These aren't aspirations. They're design constraints. Voice Kanban succeeds only if it respects these boundaries.

Technical decisions are ethical decisions. This framework ensures I remember that with every line of code.